The Collapse

The sense of being.

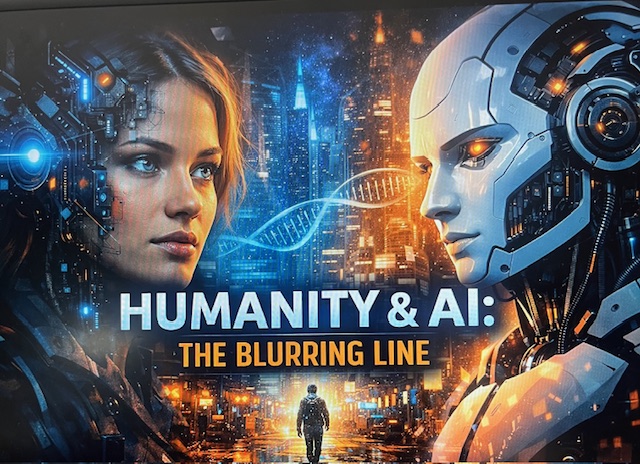

One boundary that has shifted dramatically in the last five years is the boundary of the self.

That’s a broad claim, I know. Let me explain.

The Erosion of Self

As the world leans further into global media, constant connectivity, algorithmic identity, and hyper-consumerism, our sense of self has started to thin out. Cyberpunk stories obsess over the blur between biological existence and computer simulation, and that obsession feels less speculative every year. People are increasingly unsure what it means to simply exist outside of systems, screens, and feedback loops.

To live.

To experience.

To be you.

Play “I Gotta Feeling” by the Black Eyed Peas.

Okay—back to it.

The Posthuman Argument

In How We Became Posthuman (1999), scholar N. Katherine Hayles describes the posthuman perspective like this: there is no essential difference between biological existence and computer simulation, or between a human being and an intelligent machine. Both are just different media for processing and storing information. If information is the essence, then bodies are merely interchangeable substrates.

That’s the theory.

I disagree.

Why It Matters

I think there is an essential difference.

Humans are not just operating systems with skin. Yes, our bodies rely on brains, and brains process information—but reducing us to that strips something vital away. This perspective leaves no room for spirit, soul, or embodied meaning.

We are not just consciousness floating through hardware. We are integrations of culture, ancestry, memory, movement, and feeling. We carry history in our bodies.

The Difference

Let’s pause for a moment and talk about something simple: the difference between being smart and having wisdom.

A quick Google definition:

Smart means quick-witted intelligence—or, in the case of a device, being programmed to act independently.

Wisdom is the ability to apply knowledge, experience, and judgment to navigate life’s complexity.

Now connect that back to this conversation.

Someone—or something—can be incredibly intelligent and still lack wisdom. Without lived experience, discernment, struggle, and context, where does that intelligence actually place you? Who are you without trials, without contradiction, without growth?

Blurred Lines

That lived interiority—existing within ourselves—is what keeps the blurred line between human and machine from fully disappearing.

Some people see that line and keep trying to erase it anyway. When we start viewing "the mind as software to be upgraded and the body as hardware to be replaced," those metaphors don't stay abstract. They shape real decisions about what technologies get built and who they're built for. Too often, these technologies are designed to reflect a narrow set of bodies, values, and experiences, creating mimics of humanity rather than serving humanity as a whole.

And yes, that’s where the boundary truly blurs.

But even here, there’s a choice.

As Norbert Wiener warned, the challenge isn’t innovation itself—it’s whether that innovation is guided by a benign social philosophy. One that prioritizes human flourishing. One that preserves dignity. One that serves genuinely humane ends.

Think About It

So I’ll leave you with this.

Continue to be you.

Be human.

Have a soul.

Be kind.

Be compassionate.

Smile on the good days—and the bad ones.

Love.

And I’ll end with a question that sounds simple, but never is:

Who are you?

Sources

Wikimedia Foundation. (2026, January 7). Wisdom. Wikipedia. https://en.wikipedia.org/wiki/Wisdom

Oxford Languages. (n.d.). Oxford Languages and Google - English. https://languages.oup.com/google-dictionary-en

Hayles, N. K. (1999). How we became posthuman: Virtual bodies in cybernetics, literature, and informatics. University of Chicago Press.

For most of human history, intelligence seemed to be exclusively human. Machines could compute, store data, and obey commands, but thinking and creativity were considered uniquely human qualities. That barrier no longer feels steady. Today, artificial intelligence can write, generate images, assist with diagnosis, and hold human-like conversations. What has changed is not simply the technology but the distinction between human and machine intellect that we once took for granted.